Process

This page showcases our labour of love from start to finish. From inspiration and ideation, through our deepfake journey, to video editing, we experimented extensively to produce an experience we are really proud of.

Our creative challenge draws inspiration from analogue horror and aims to celebrate ShallowFakes as a creative medium. It also aims to question the state of media culture as AI technologies become increasingly available.

Visual Inspiration

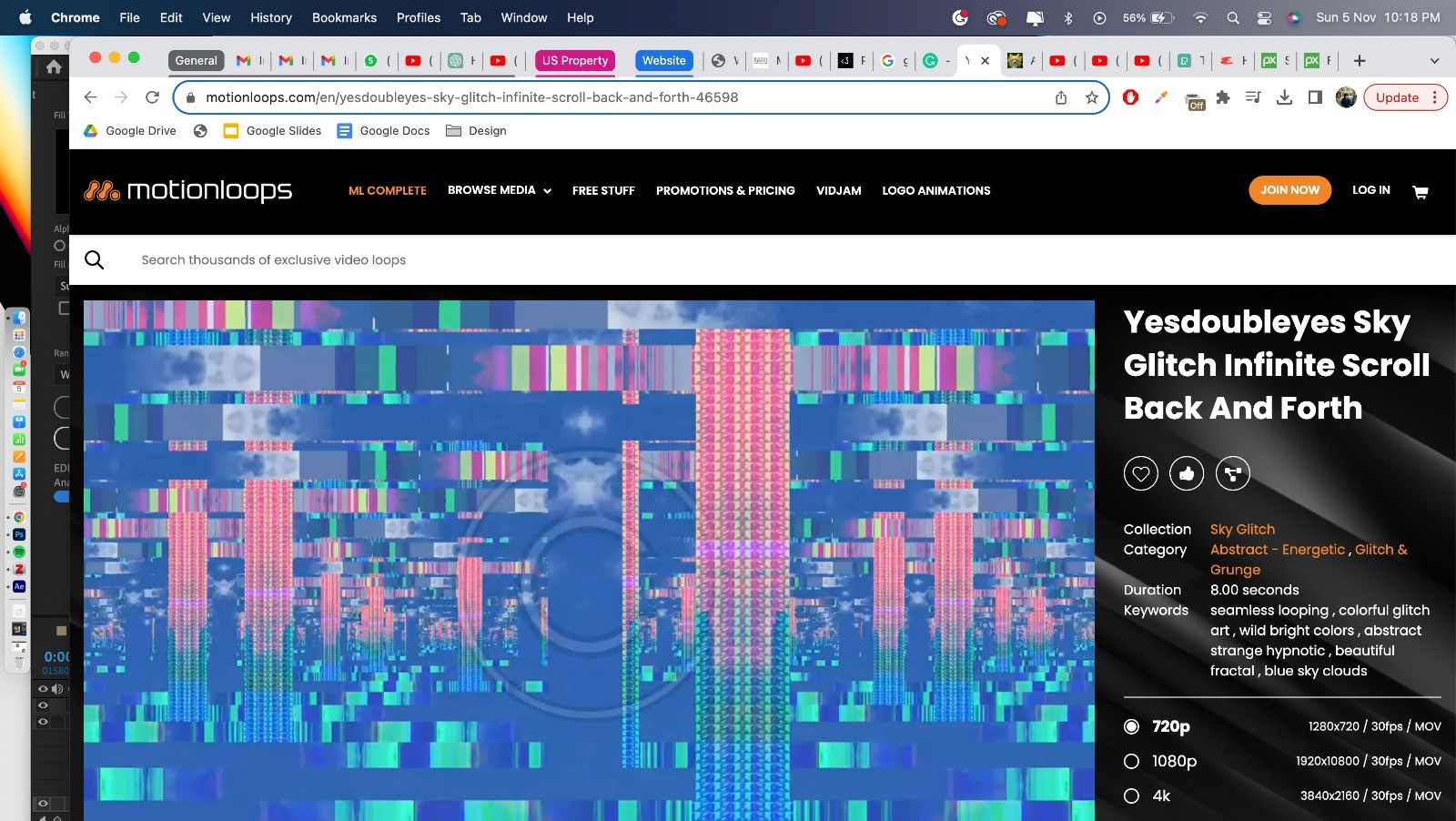

We referenced contemporary glitches to support a gradual disruption of our artefact. Drawing inspiration from Tame Impala concert visuals and the Ronald Reagan SCP file (see below), we explored and imagined glitches in the format of a phone.

The Motion Tile function is one effect we explored to create what we call the "Infinite Scroll Glitch", which mimics a phone user's scrolling behaviour. Another is Datamoshing, which was critical in creating a steady build-up that attracts and engages the audience.

All in all, these components helped us imagine a gradual disruption in the format of a phone. They not only add a realistic and dynamic dimension to our visuals but also support our video's story.

Ideation

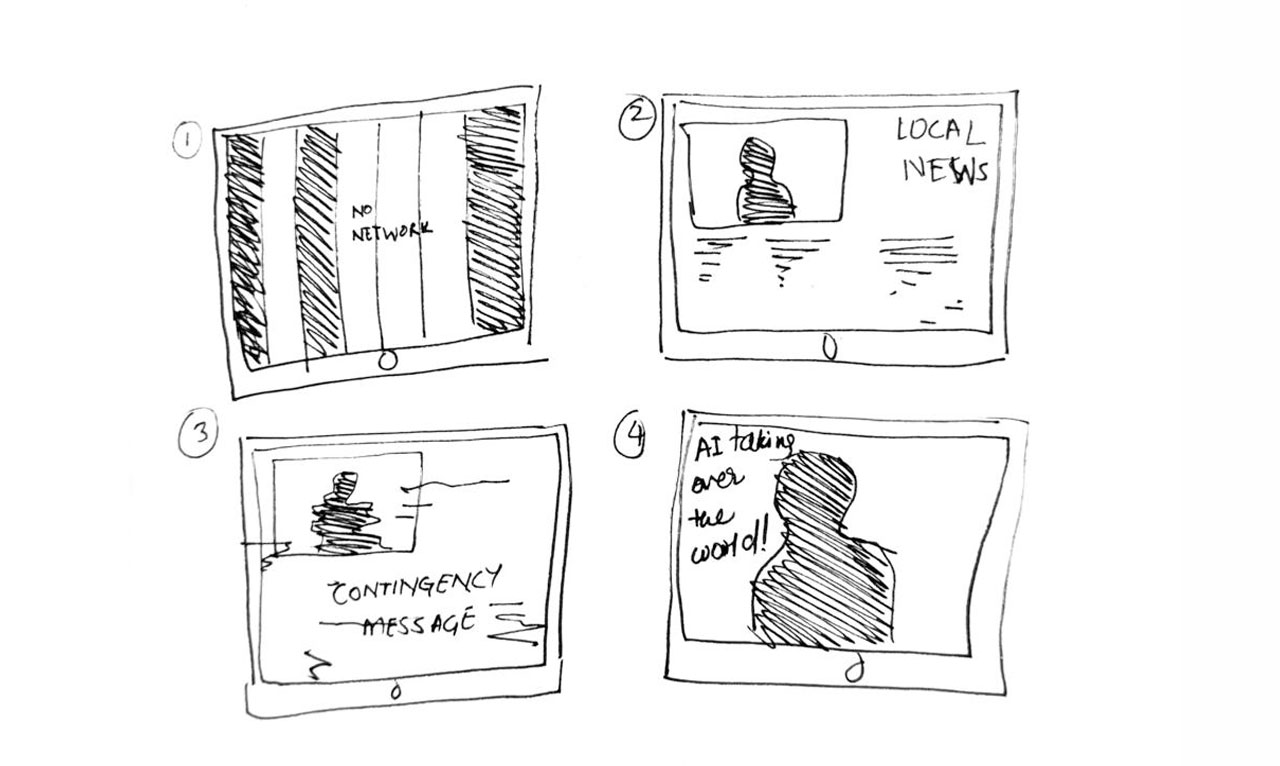

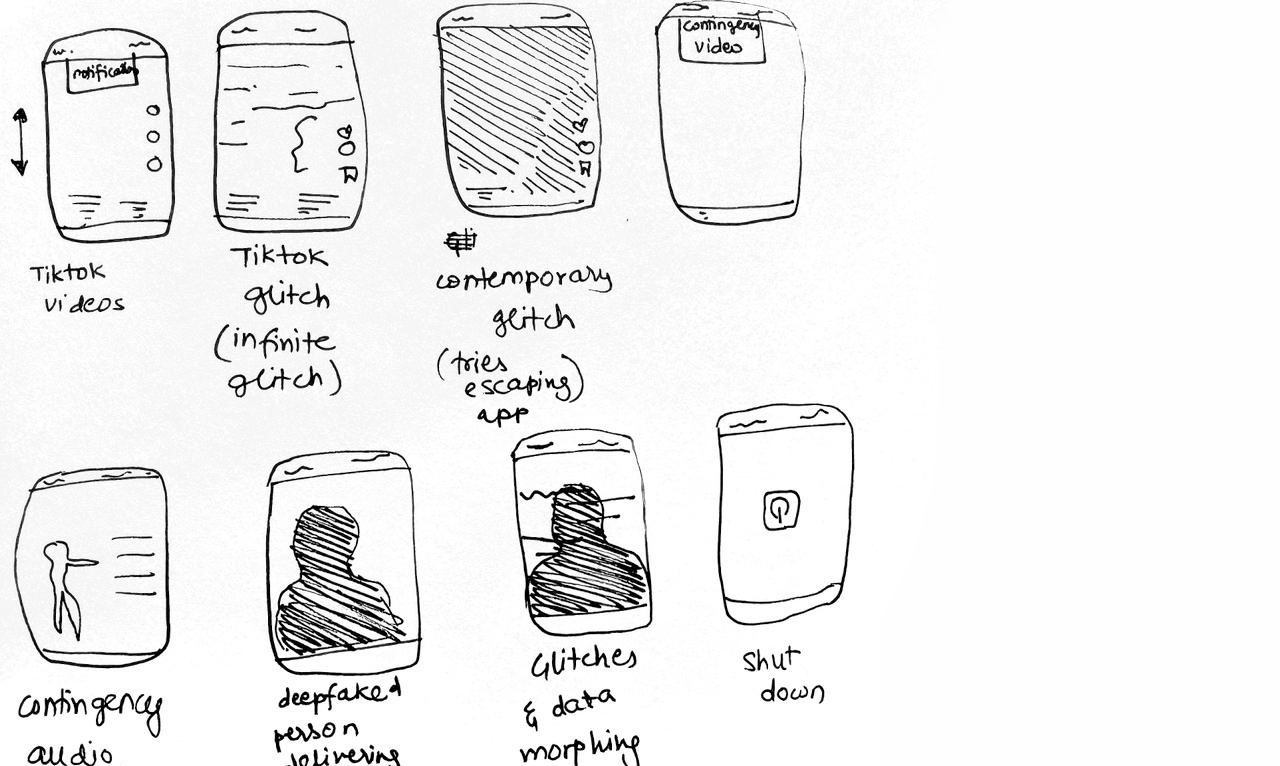

Our creative journey began with the idea of moving from the routines of everyday life to a sudden and chaotic crisis message. We were initially inspired by television, specifically the spooky atmosphere of local broadcasts similar to Local 58. (Refer to Storyboard 1)

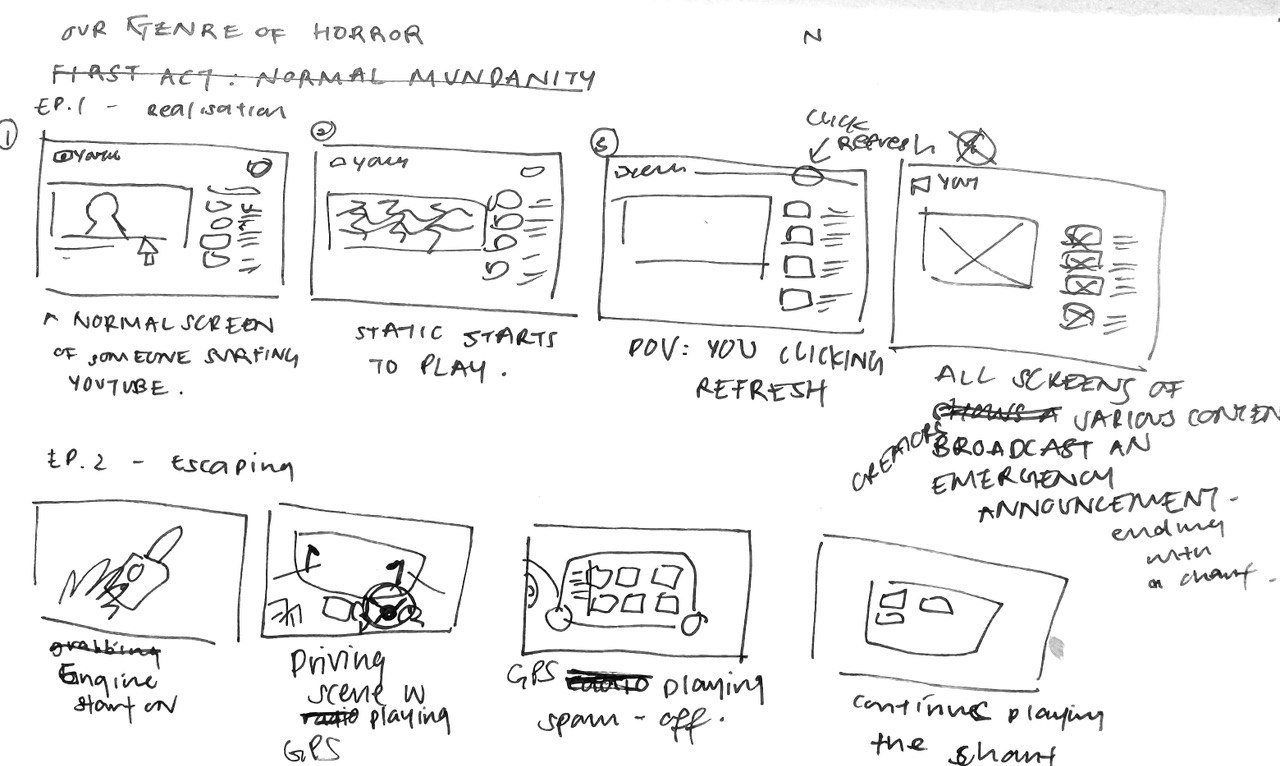

As our ideas grew, we turned our focus to YouTube, recognising its position as the most popular video site. (Refer to Storyboard 2) However, we ultimately settled on a mobile interface, specifically TikTok, because of its enormous popularity among Generation Z. (Refer to Storyboard 3) TikTok's relatability within this group enabled us to tell our narrative effectively and engage our target audience.

Storyboard 1

Storyboard 2

Storyboard 3

Deepfake Journey

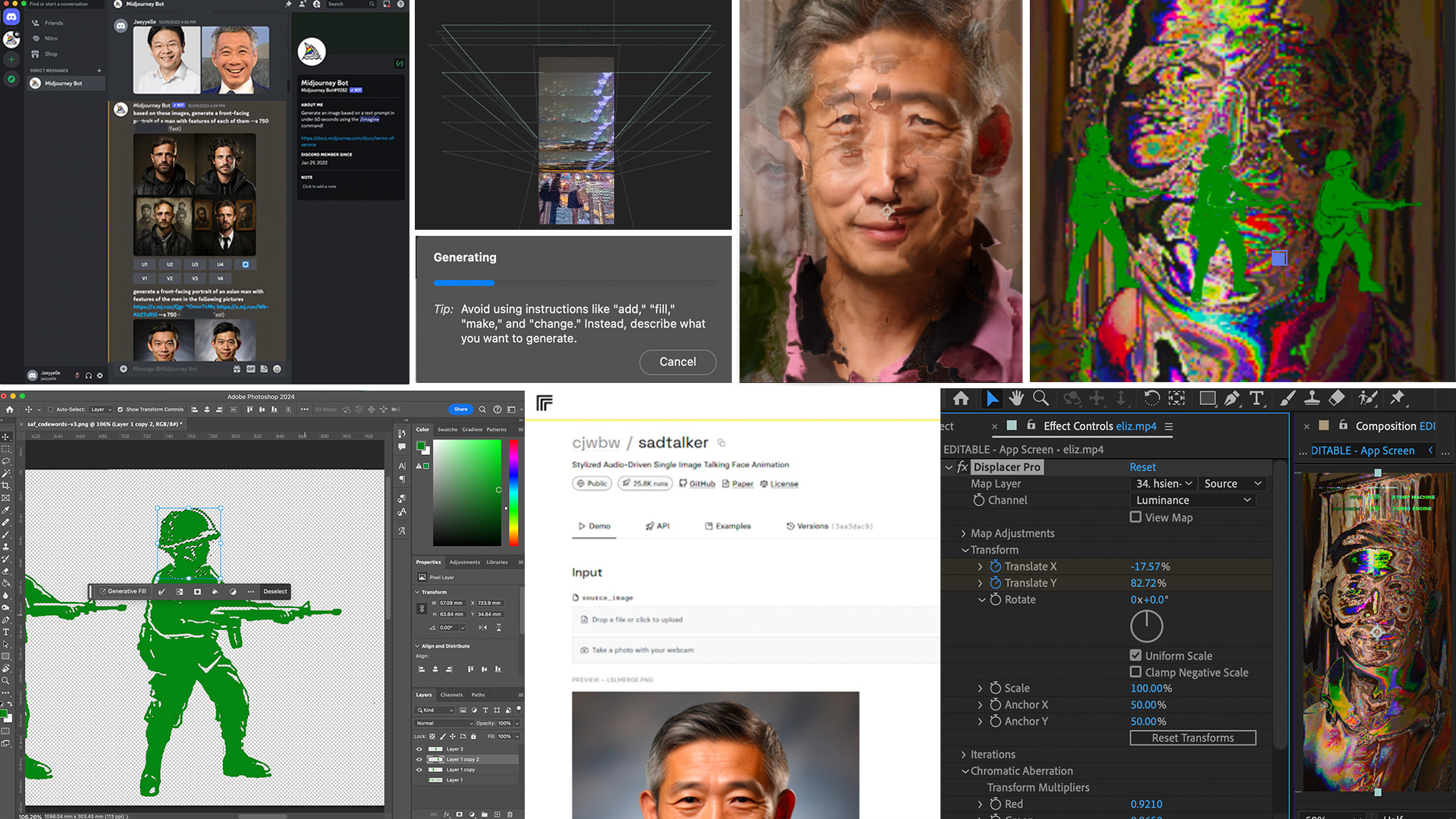

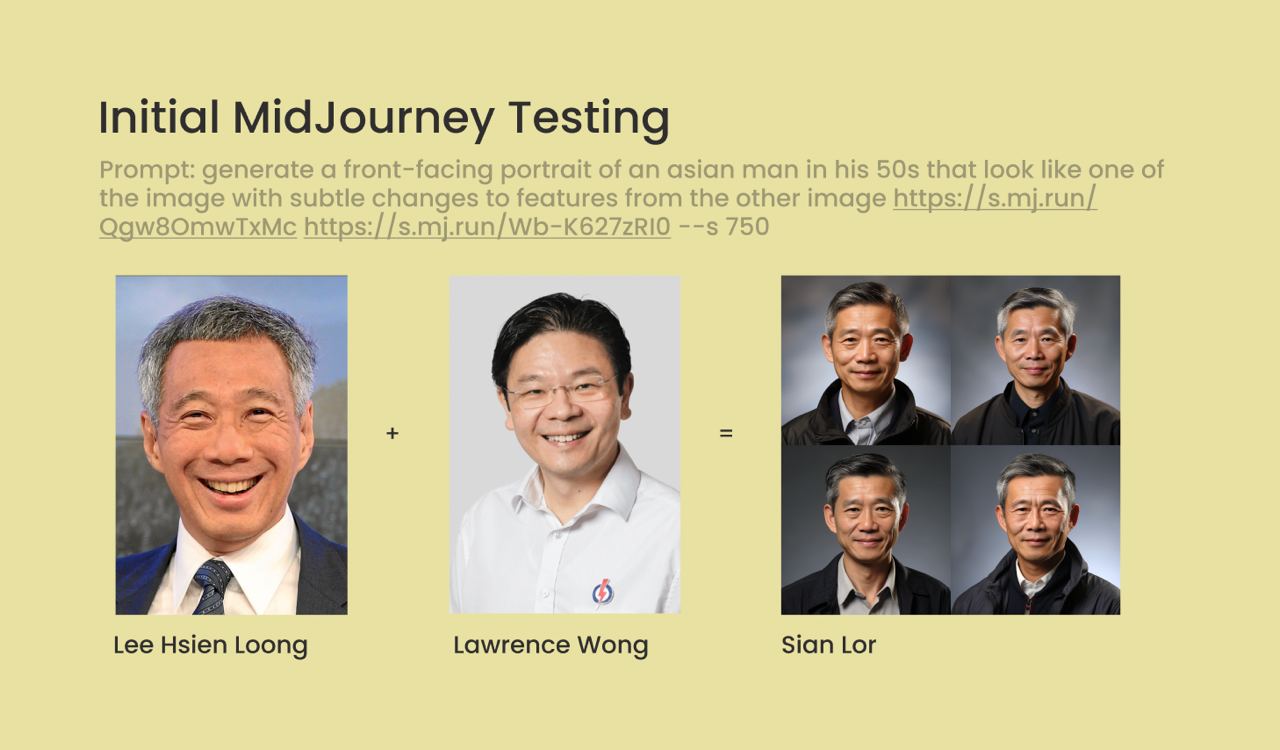

Midjourney

Our deepfake journey began with Midjourney, software that creates images from text prompts. And, of course, as we were inspired by TikTok subgenres, we tested mixing famous figures to indulge our imagination. One notable creation is the mix of Lee Hsien Loong and Lawrence Wong, which we named "Sian Lor". So, why did we imagine that? Well, if you know, you know.

ChatGPT

Following that, we delved into creating a narrative of a dystopian future where AI has taken over the human world. Using ChatGPT, we could mould a story that complements our graphics.

AI Voice Manipulation

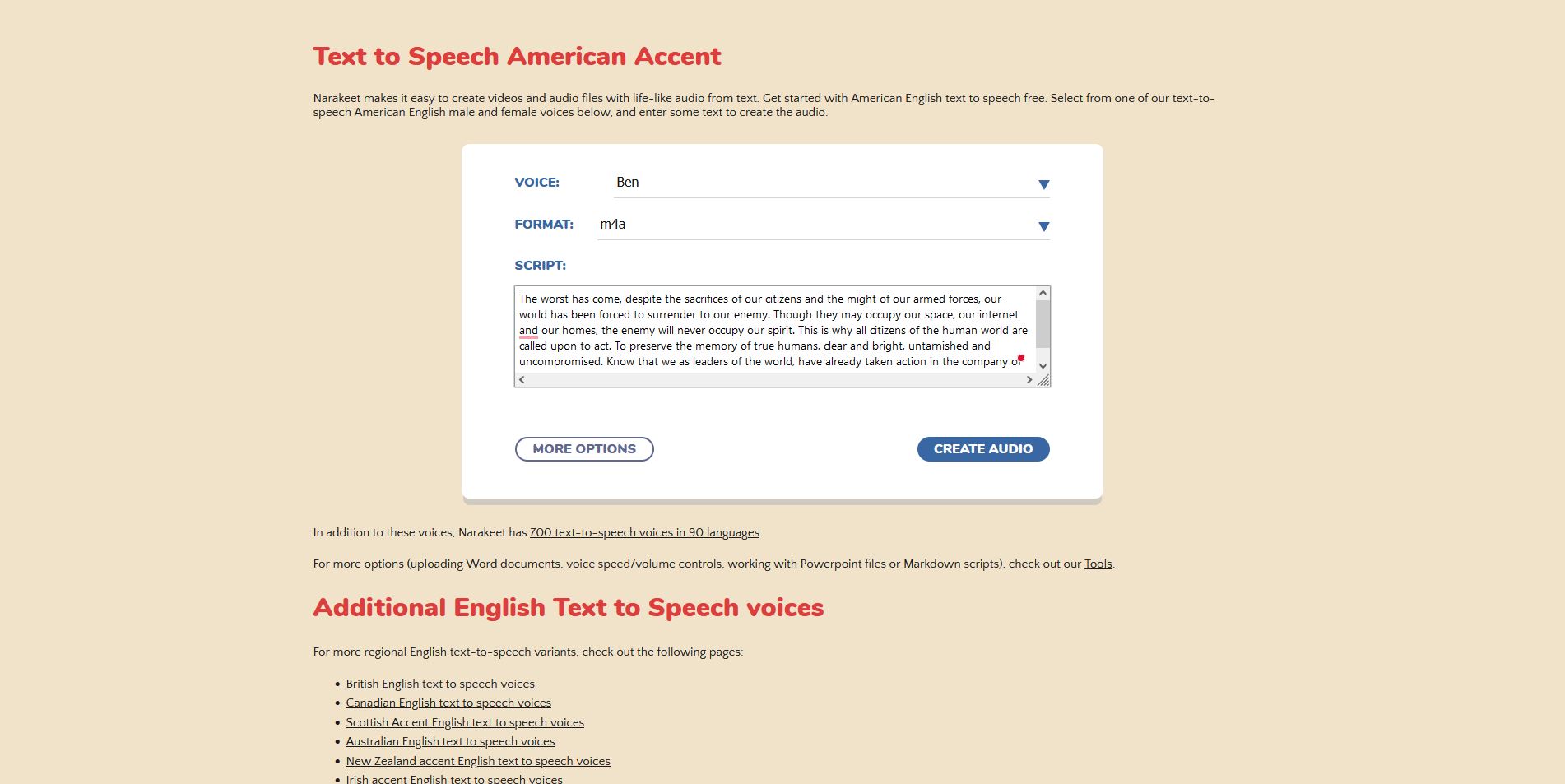

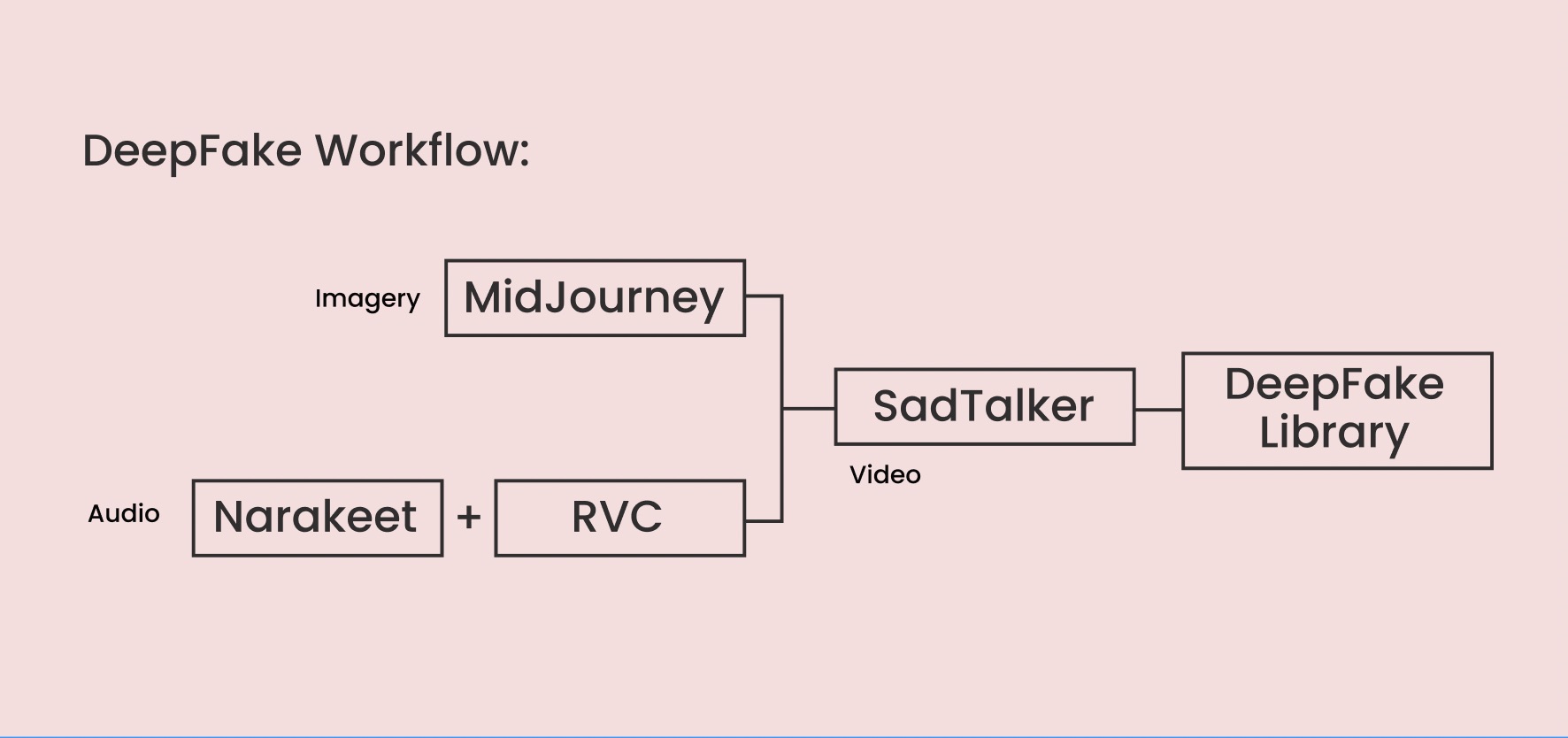

We first used Narakeet to turn text-to-speech to create narration for our video. (Refer to

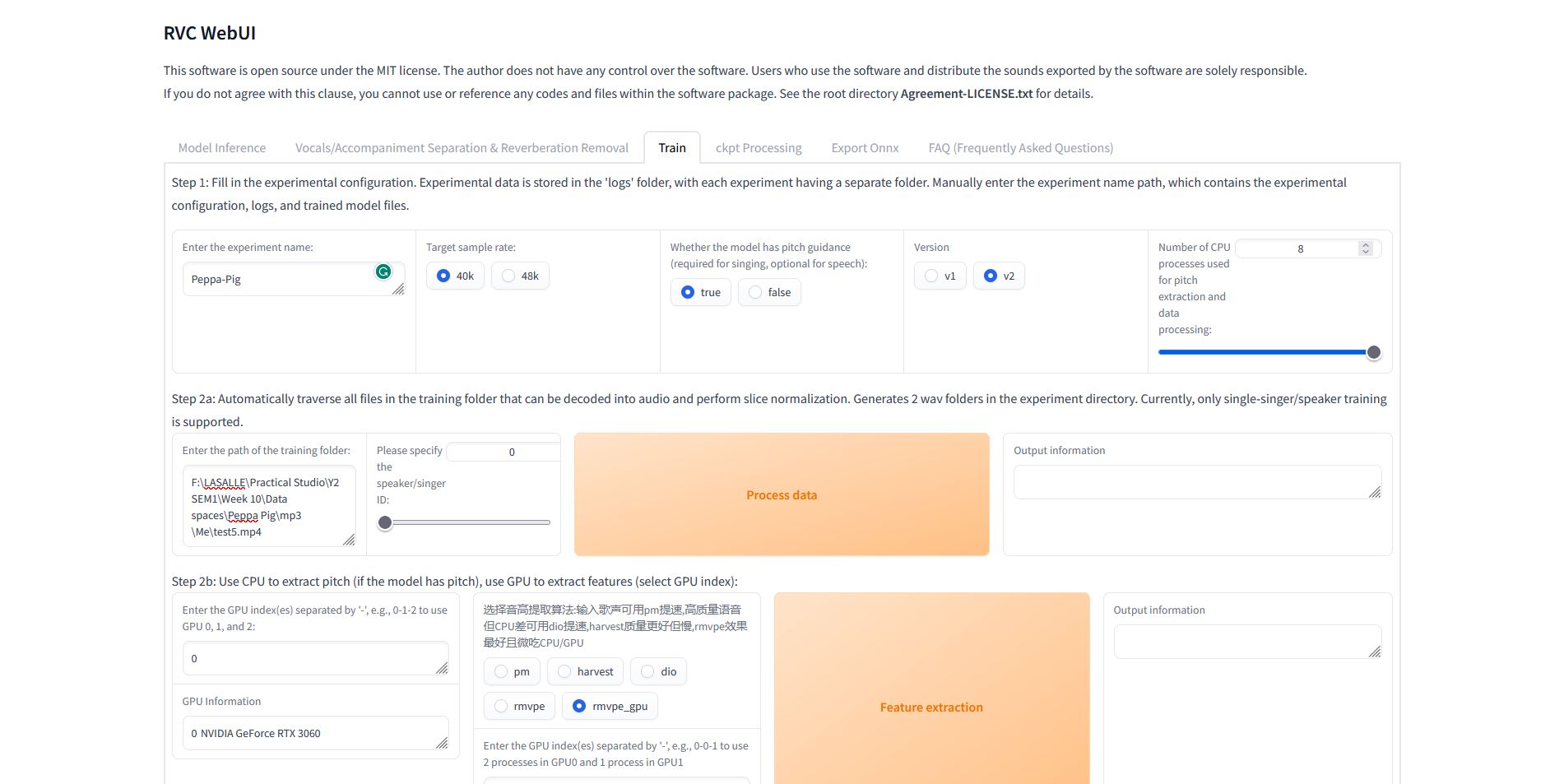

Audio 1) To give our product a more realistic feel, we used RVC software to clone the voices of famous

figures. One notable voice that we have cloned is Lee Hsien Loong's voice. (Refer to Audio 2) Using

audio of his existing speeches online, we could clone his voice to narrate a script for our

artefact.

RVC also allows us to control the voice's octaves, which helps create an eerie effect. (Refer to Audio

3)

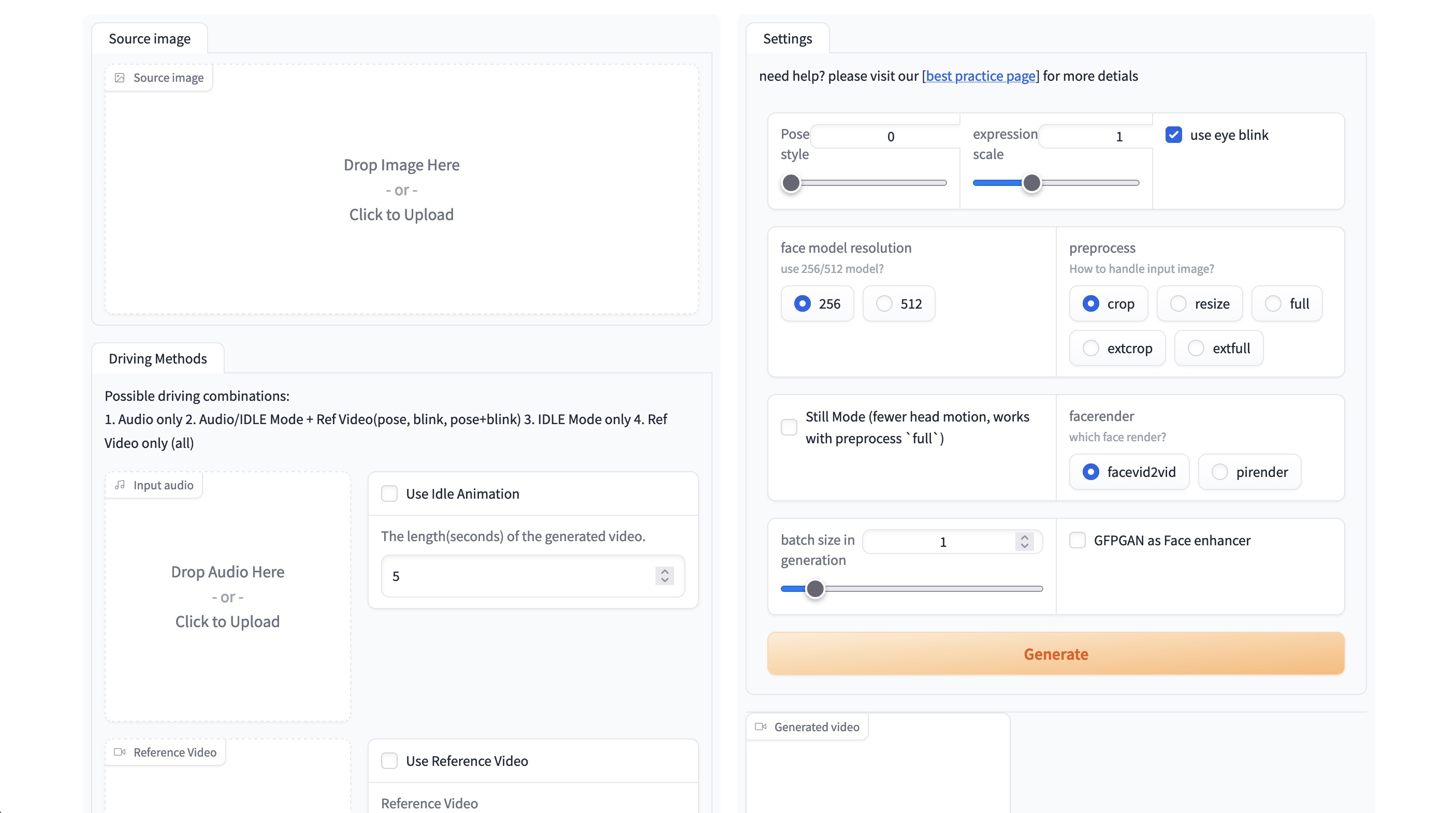

SadTalker

SadTalker is the potion pot of our DeepFake journey. (Refer to figure 1) It is free online software that allows users to upload an image and an audio file separately to generate talking portraits/videos. With this workflow, we could create an array of assets to help us make our artefact. (Refer to figure 2)

Deepfake Workflow Overview

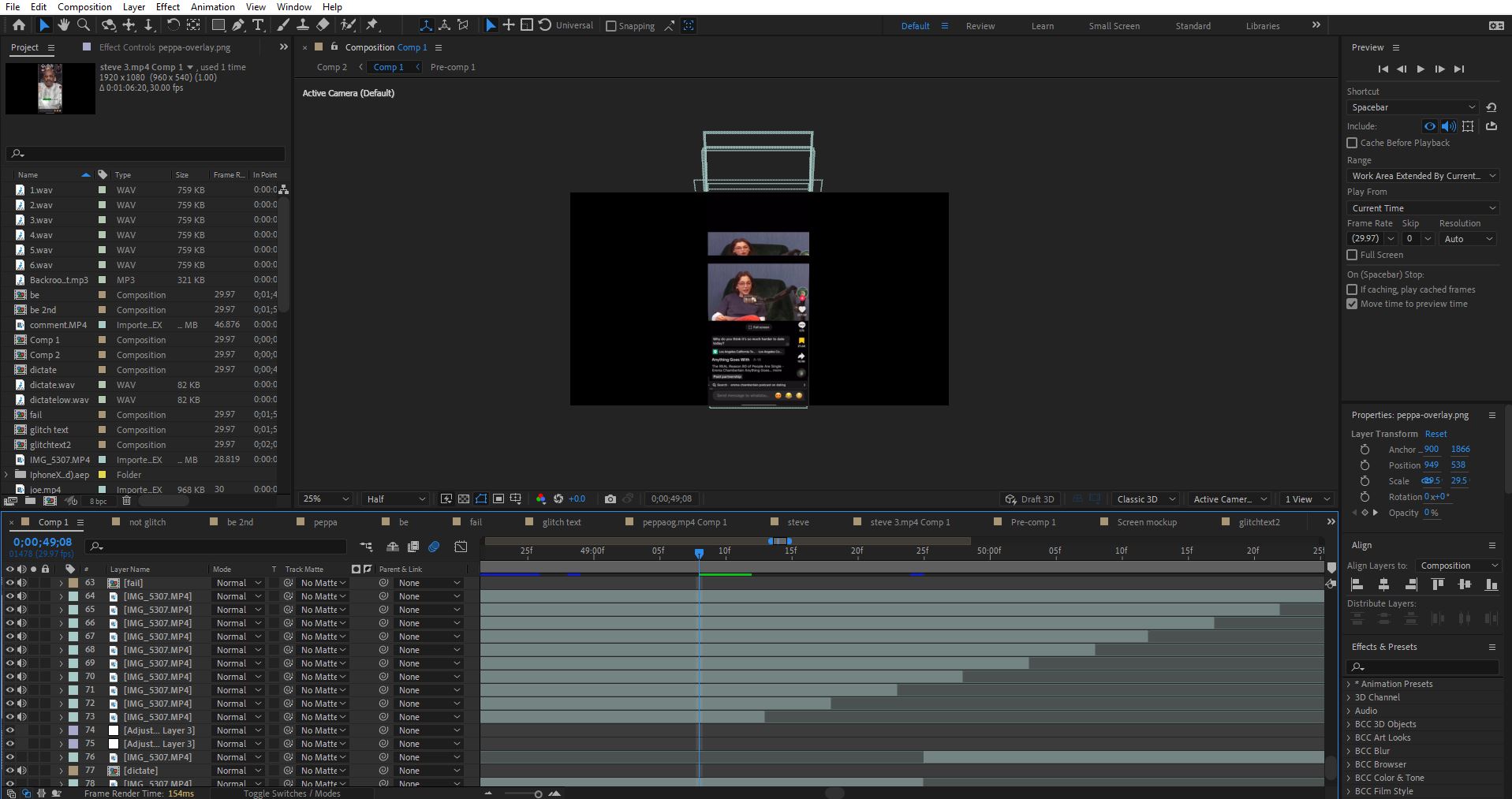

Video Editing: Effects

As we recognised that deepfakes generated with SadTalker still look unnatural, we delved into various video-editing techniques to weave the intended effect of horror into a video narrative. We drew inspiration from Analogue Horror and anchored the edit in the concept of "gradual tension". Below, we explain how we achieved the eeriness through three components: (1) effects, (2) graphics and (3) sounds.

Act 1. TikTok

Act 2. Intermission

Act 3. Emergency Broadcasts

① Infinite Scrolling Glitch

The first act starts with the mundane act of browsing TikTok and gradually shows signs of interruption. We use the Motion Tile, Modulation and Pixel Sorter effects to support the narrative. The pictures below show our research on the infinite scrolling glitch (Refer to Figure 1) and an exploration of multiplying frames (Refer to Figure 2) to imagine an error in TikTok's scrolling function. This technique helps us convey an early sign of AI taking over what we see on the phone.

Figure 1. Research

Figure 2. Infinite Scroll
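The wrap-around idea behind the infinite scrolling glitch can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions, not how the Motion Tile effect in After Effects is implemented: the frame is modelled as a plain list of rows, and the names `scroll_wrap` and `infinite_scroll_frames` are hypothetical.

```python
def scroll_wrap(frame, offset):
    """Shift rows up by `offset`; rows that leave the top re-enter at the bottom."""
    if not frame:
        return []
    k = offset % len(frame)
    return frame[k:] + frame[:k]


def infinite_scroll_frames(frame, n_frames, step):
    """Build a short clip in which every frame is scrolled `step` rows further."""
    return [scroll_wrap(frame, i * step) for i in range(n_frames)]


# Toy "frame": each string stands in for one row of pixels (an assumption).
frame = ["row0", "row1", "row2", "row3"]
frames = infinite_scroll_frames(frame, n_frames=3, step=1)

for f in frames:
    print(f)
# ['row0', 'row1', 'row2', 'row3']
# ['row1', 'row2', 'row3', 'row0']
# ['row2', 'row3', 'row0', 'row1']
```

Because the content never runs out, the feed appears to scroll forever, which is the error we wanted the viewer to notice.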

② Datamoshing

Following the build-up, we introduce Datamoshing to imagine a total invasion of AI. Datamoshing tools were critical in helping us create a visually compelling effect that keeps audiences on edge. As the pictures below show, we tested various plugins such as Datamosh 2 (Refer to Figure 3) before finalising Pixel Sorter (Refer to Figure 4), Modulation (Refer to Figure 5) and Displacer Pro (Refer to Figure 6) in After Effects, as they provided better control over the gradual build-up.

Figure 3. Datamosh 2

Figure 4. Pixel Sorter

Figure 5. Modulation

Figure 6. Displacer Pro

Figure 7. After Effects
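As a rough illustration of what a pixel-sorting glitch does, the Python sketch below sorts runs of bright pixels in each row of a tiny greyscale image. It shows one common form of the technique and is an assumption, not the algorithm used by the Pixel Sorter plugin; the function names and the `threshold` parameter are hypothetical.

```python
def pixel_sort_row(row, threshold):
    """Sort each run of consecutive pixels brighter than `threshold`."""
    out = list(row)
    run_start = None
    # Iterate one step past the end so a run touching the right edge is closed.
    for i in range(len(row) + 1):
        in_run = i < len(row) and row[i] > threshold
        if in_run and run_start is None:
            run_start = i                      # a bright run begins here
        elif not in_run and run_start is not None:
            out[run_start:i] = sorted(row[run_start:i])
            run_start = None                   # the run has ended
    return out


def pixel_sort(image, threshold):
    """Apply the row-wise sort to every row of a greyscale image."""
    return [pixel_sort_row(row, threshold) for row in image]


# Toy 2 x 6 greyscale image with brightness values from 0 to 255.
image = [
    [10, 200, 150, 220, 20, 90],
    [250, 240, 30, 180, 170, 160],
]
sorted_image = pixel_sort(image, threshold=100)
print(sorted_image[0])  # [10, 150, 200, 220, 20, 90]
print(sorted_image[1])  # [240, 250, 30, 160, 170, 180]
```

In this sketch, lowering `threshold` over time makes the sorted runs longer, so the smear spreads across the frame. That is one way to picture the kind of gradual build-up we were after.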

Video Editing: Graphics

① Phone Notification

A significant motif that we employ is message notifications. Serving as a counterpoint to the narrative, they allow us to add a sense of humour through incoming messages from imagined friends and family. They also helped transition the video from TikTok to an emergency broadcast.

② Phone UI

During the intermission and the final scene, we employ Phone UI graphics, specifically the Face ID (Refer to Figure 9), Detecting Location (Refer to Figure 10) and Phone Shutdown (Refer to Figure 11) screens, to convey a sense of realism. They also helped with the pacing of the gradual build-up.

Figure 9. Face ID

Figure 10. Detecting Location

Figure 11. Shutdown Screen

③ Local Media Imagery

The second half of the intermission relies on the "found footage" of a local contingency broadcast. By editing the footage, we could weave the video into the second act with a sense of realism.

Figure 12. Unedited Video

Figure 13. Edited Video

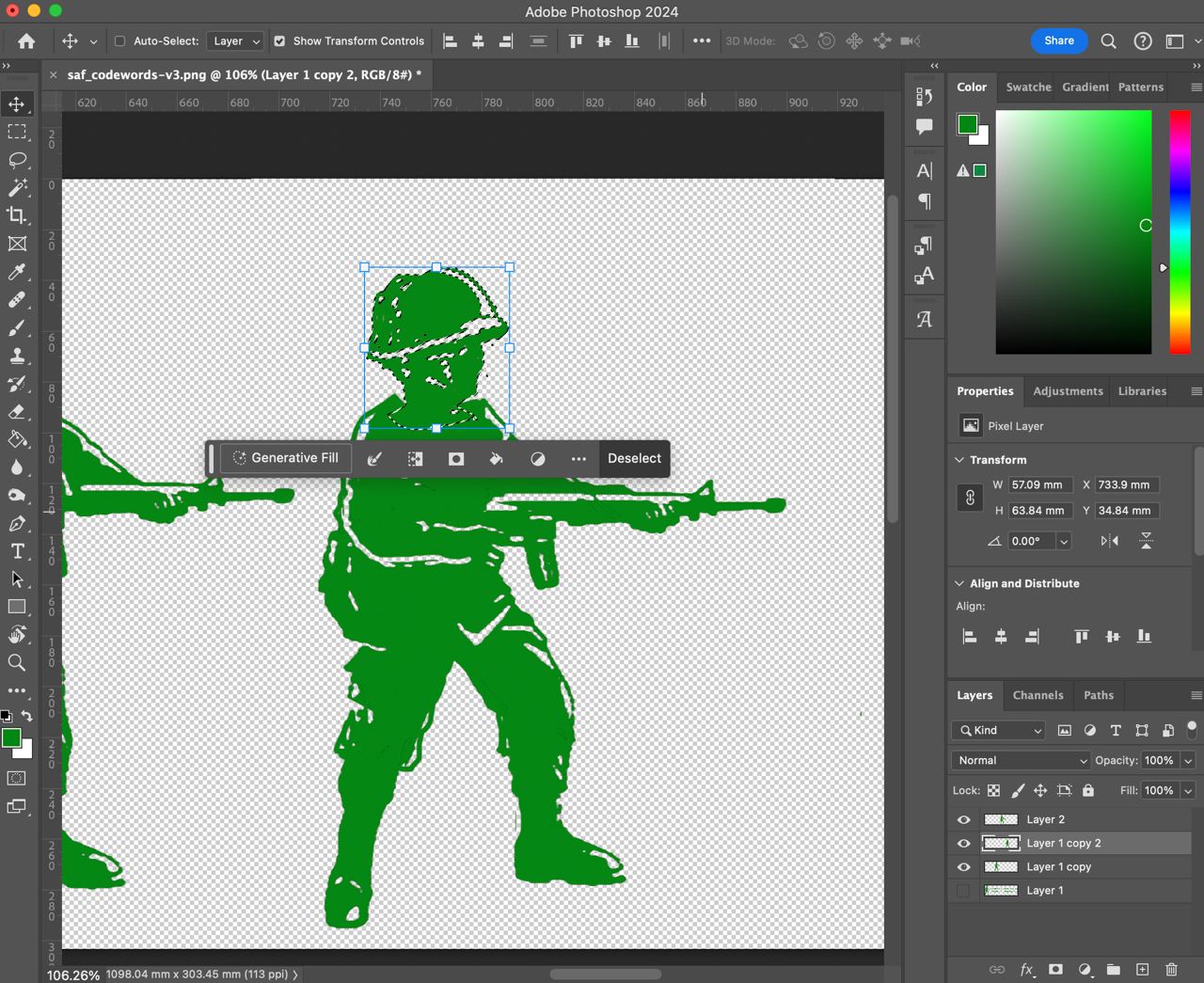

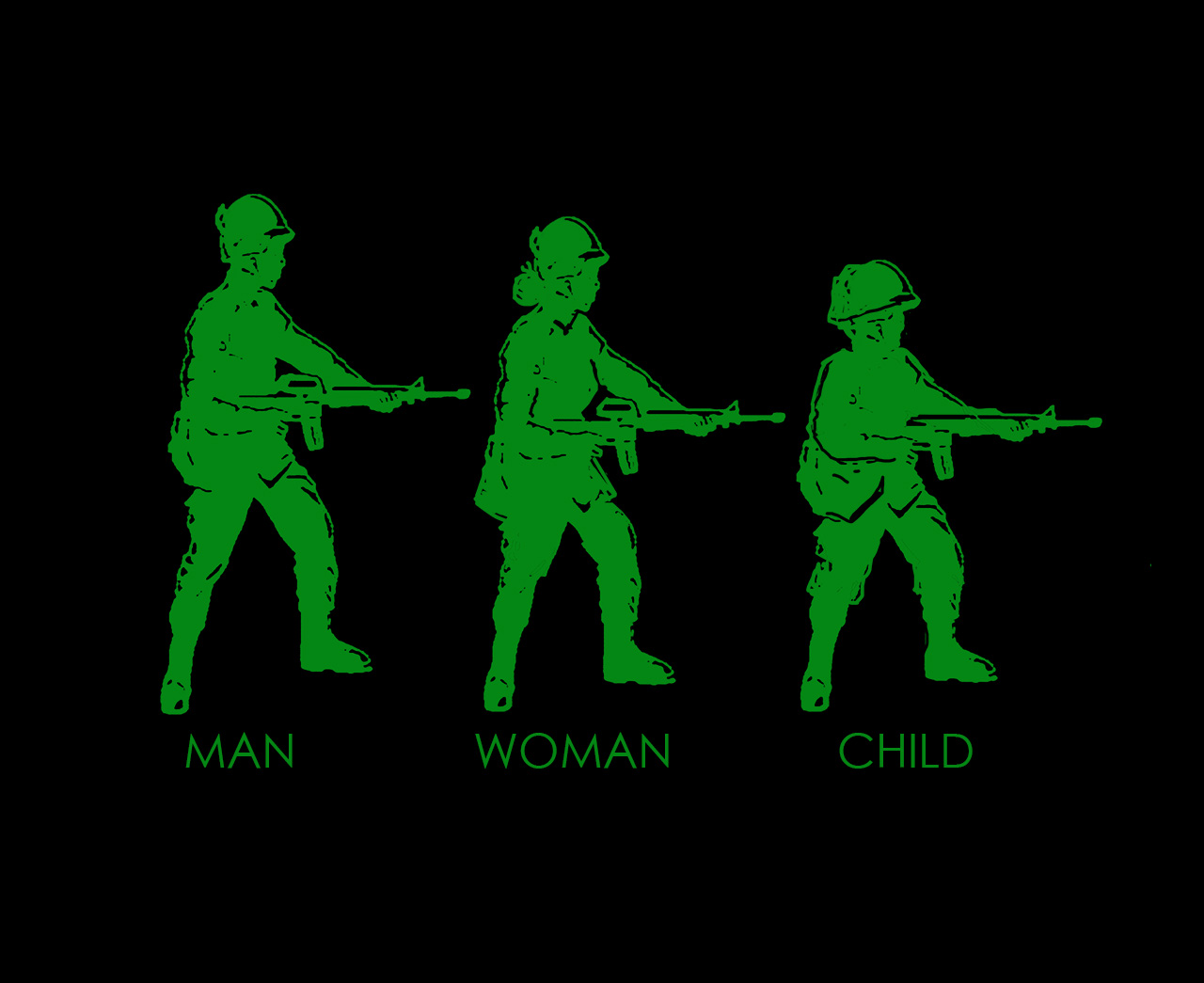

④ Military Mobilisation Icon

Another significant motif in the third act is the military mobilisation symbol. This graphic brings a localised context that adds to the realism and supports the imagery of "Sian Lor". By manipulating the icon's silhouette (Refer to Figure 14), we created 'woman' and 'child' versions (Refer to Figure 15) to tie it back to the narration.

Video Editing: Sounds

① Phone Vibration

Building on Phone UI, we also introduced phone vibrations, which can be felt when the audience holds the phone. This sensation can also add to the immersive experience.

② Narration

Using the audio we generated from RVC, we stacked various pitches to create an eerie sound, specifically during the third act.
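The stacking idea can be sketched in plain Python. This is a simplified illustration and not how RVC changes pitch: it shifts pitch by naive resampling (which also changes the clip length), uses a sine tone as a stand-in for the cloned voice, and the names `pitch_shift` and `stack_pitches` as well as the chosen semitone offsets are hypothetical.

```python
import math


def pitch_shift(samples, semitones):
    """Naive pitch shift: resample with linear interpolation.

    Reading the input faster raises the pitch but also shortens the clip.
    """
    ratio = 2 ** (semitones / 12)        # +12 semitones doubles the frequency
    n_out = int(len(samples) / ratio)
    out = []
    for i in range(n_out):
        pos = i * ratio
        left = int(pos)
        right = min(left + 1, len(samples) - 1)
        frac = pos - left
        out.append(samples[left] * (1 - frac) + samples[right] * frac)
    return out


def stack_pitches(samples, semitone_offsets):
    """Mix several pitch-shifted copies, trimmed to the shortest layer."""
    layers = [pitch_shift(samples, s) for s in semitone_offsets]
    length = min(len(layer) for layer in layers)
    return [sum(layer[i] for layer in layers) / len(layers) for i in range(length)]


# Stand-in for one second of narration: a 220 Hz tone at 8 kHz (an assumption).
sample_rate = 8000
voice = [math.sin(2 * math.pi * 220 * t / sample_rate) for t in range(sample_rate)]

# One octave down, the original pitch, and a fifth up, layered together.
eerie = stack_pitches(voice, semitone_offsets=[-12, 0, 7])
```

Averaging the layers keeps the mix within the original amplitude range, so the stacked voice does not clip.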

③ Background Track

A significant suspense component is the background track that gradually builds to a peak. This track helps us to tie the video together.

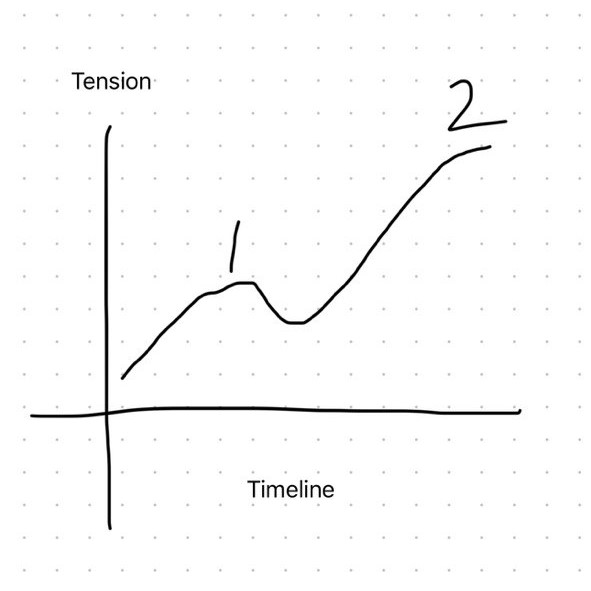

The line chart maps out the tension of the video narrative, which is split into two climaxes. (Refer to Figure 19) Altogether, the making of "Let's Be Friends" is a close study of gradual build-up, applying various effect, graphic and sound manipulation techniques to our DeepFake library.

Experience

① Making of Booth

As a final touch, we wanted to give viewers and instructors an immersive experience when watching the video. We imagined our artefact being seen in a semi-enclosed setting, inspired by the typical TikTok-watching scenario, in which users frequently scroll through content in an enclosed space, especially at night. To make this vision a reality, we used the L-Shaped columns (Refer to Figure 20) in the classroom to create an environment similar to the TikTok scrolling experience. (Refer to Figure 21)

Figure 20. Carrying of L-Shaped Columns

Figure 21. Built Structure

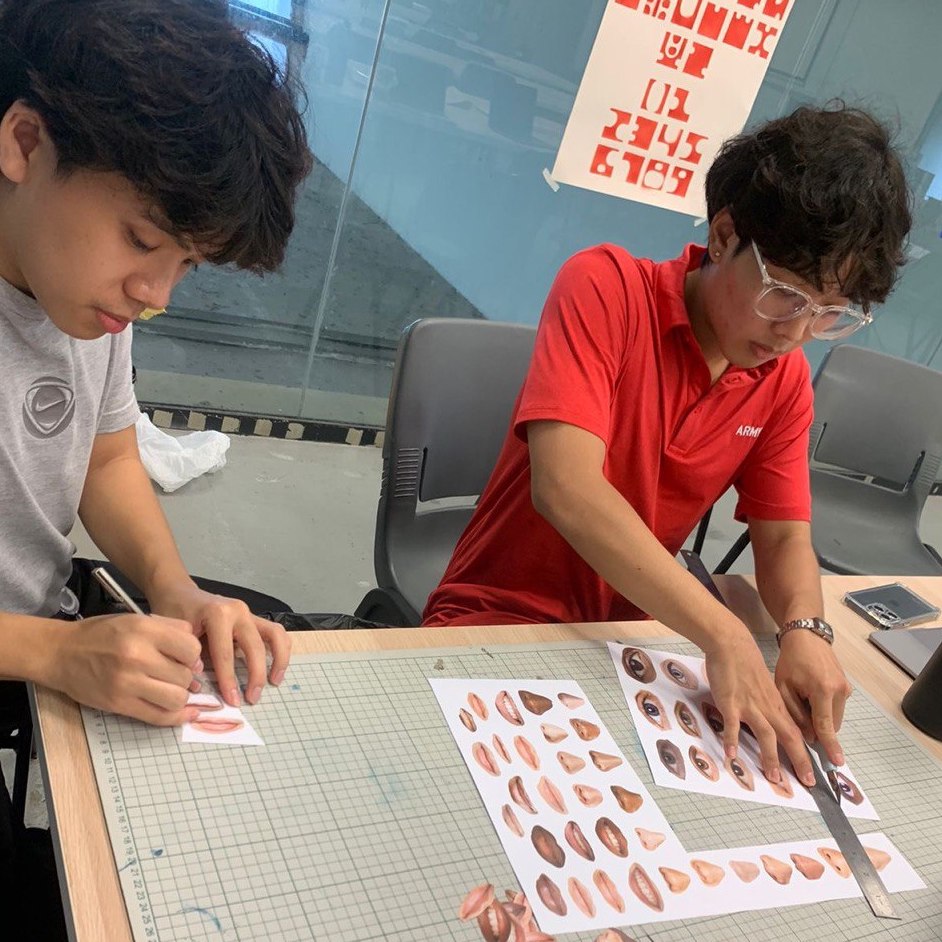

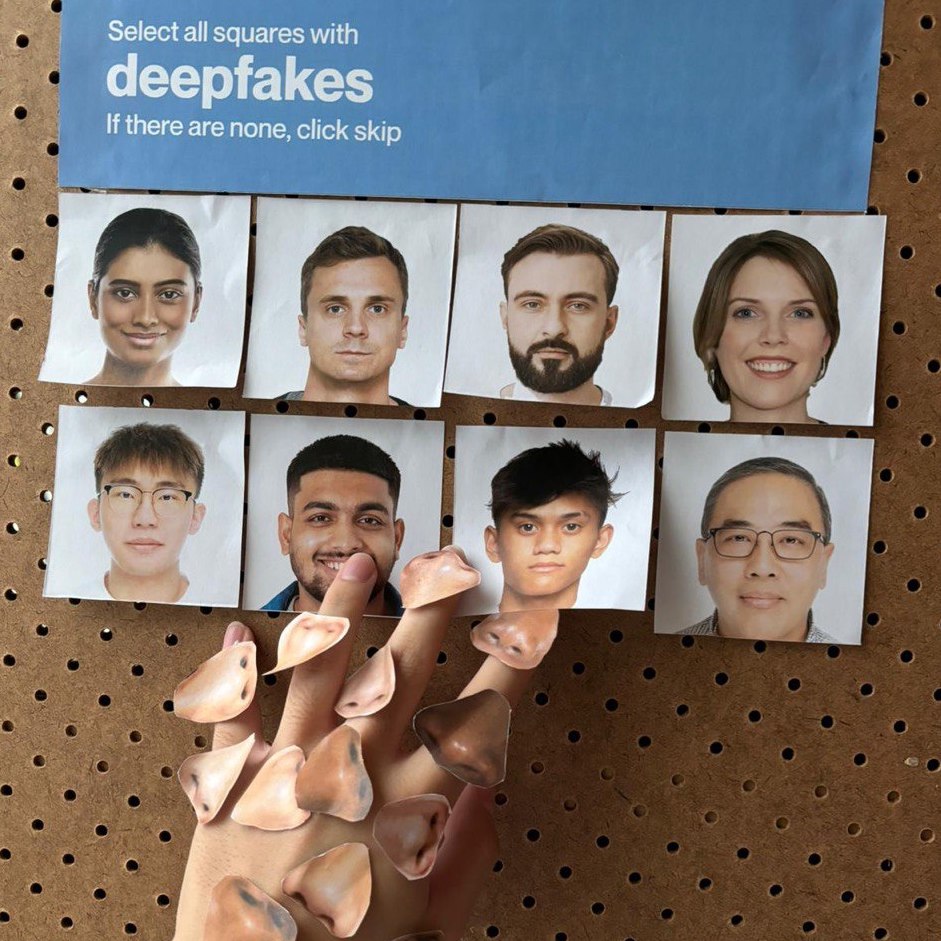

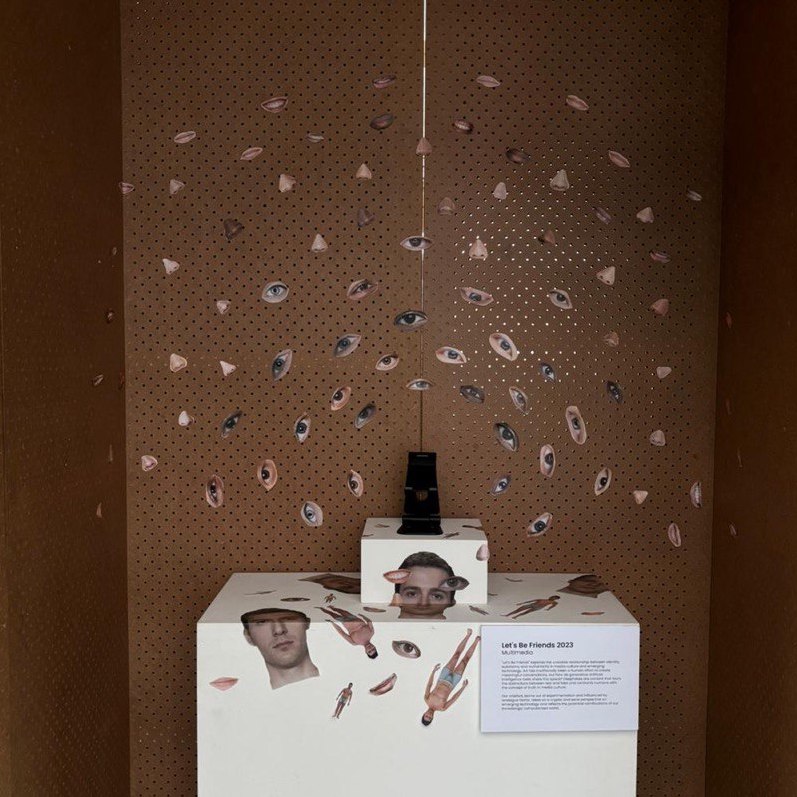

② Additional Collaterals

We wanted more than just an empty space with a phone. We therefore included a reCAPTCHA pop-up (Refer to Figure 24), similar to those found on websites, which served as a verification step before entering the viewing booth. Inside the booth, we pasted cutouts of the facial features that are usually deepfaked, such as the eyes, nose and mouth. (Refer to Figures 22 and 25) A standalone mobile stand holding the mobile device was placed in the centre, providing a thorough and engaging experience for individuals interacting with our artefact.

Figure 22. Cutting Eyes, Nose and Lips

Figure 23. Pasting Eyes, Nose and Lips

Figure 24. Installing reCAPTCHA Poster

Figure 25. Completed Booth Decoration